3 comments

Really enjoyed this overview and summary of the latest events, can’t wait for them to be fixed and great work to all the BPs for managing this so professionally and not letting WAX network drop during this attack.

Summer has passed with tons of activity as usual, some of it great... but for the last few days there have been some issues. You might have noticed that some of the transactions you pushed looked solid, but somehow never made it into a block and didn't reach the blockchain. Perhaps you bought an NFT, and afterwards realized you never got one... then you went into your account's history and couldn't find any trace of that transaction, and your WAXP tokens were still in your possession, without that NFT... and if you were really unlucky, someone else bought that NFT in the meantime.

At a later date, we aim to provide a full breakdown of what happened, why it happened and what has been done to fix it. As of now, it is still a matter of getting to the bottom of the issue and fixing the problems that allowed this to happen. Not just the first layer of obvious symptoms, but actually digging deeper.

The TLDR version will be in the summary, but the information before it is likely good for you to understand more about the how.

The main cause of the issues over the last few days is temporarily fixed, and a more long-term solution is being worked on. However, it has also brought a few other existing issues to light. Some of the identified issues might just be smoke and correlations; others might actually be things that have to be optimized on a node operator level, in smart contract structure or even in the main blockchain code. Although the profit incentive that was being exploited this time has been removed, the core issues that made it possible still exist, which is why this article won't contain many details at this stage. Instead it will explain what happened in a technical way, hopefully using language that normal people can grasp... hopefully.

Guilds, or Block Producers, run many different types of nodes. They have Block Producer nodes, which are the most essential nodes: they receive transactions, validate them, and put them into blocks. Each producer produces 12 blocks per round, and then it's the next producer's turn. All peered nodes then validate the previous work, and the next producer in the schedule continues with the following 12 blocks. Each producer has 6 seconds to verify what it has received, execute transactions, sign the blocks and hand them off to the next producer in line through a network of peering nodes.

Each block can fit 200ms of CPU time. If a lot of transactions are in the transaction queue, those that can't fit in a block remain in the queue and are re-applied for the next block, and the next, and so forth. This goes on until they either expire or are put into a block. Each transaction has an expiration time limit. If it isn't in a block by that time, it is treated as an invalid transaction. This expiration time can be adjusted by the client and is there for your safety.
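To make the queue behaviour a bit more concrete, here is a minimal Python sketch. It is only an illustration under assumptions, not how the node software is actually implemented: the `Tx` class, the `produce_blocks` function and the half-second block interval (derived from 12 blocks per 6-second round) are made up for this example, and it assumes a producer may skip a transaction that doesn't fit and try a smaller one.

```python
from collections import deque
from dataclasses import dataclass

BLOCK_CPU_BUDGET_MS = 200  # per-block CPU budget quoted in the text
BLOCK_INTERVAL_MS = 500    # assumption: 12 blocks per 6-second round


@dataclass
class Tx:
    tx_id: str
    cpu_ms: int         # time the producer needs to apply the transaction
    expires_at_ms: int  # expiration chosen by the client


def produce_blocks(queue, num_blocks):
    """Return (blocks, expired, still_pending) after num_blocks blocks."""
    pending = deque(queue)
    blocks, expired = [], []
    for n in range(num_blocks):
        now_ms = n * BLOCK_INTERVAL_MS
        budget_ms = BLOCK_CPU_BUDGET_MS
        block, leftover = [], deque()
        while pending:
            tx = pending.popleft()
            if tx.expires_at_ms <= now_ms:
                expired.append(tx.tx_id)   # too late: dropped for good
            elif tx.cpu_ms <= budget_ms:
                budget_ms -= tx.cpu_ms     # fits: goes into this block
                block.append(tx.tx_id)
            else:
                leftover.append(tx)        # retried on the next block
        pending = leftover
        blocks.append(block)
    return blocks, expired, [tx.tx_id for tx in pending]


queue = [
    Tx("a", 150, 1000),
    Tx("b", 100, 1000),
    Tx("c", 50, 1000),
    Tx("d", 180, 400),  # short expiration: never finds room in time
]
blocks, expired, pending = produce_blocks(queue, num_blocks=2)
```

In this toy run, "a" and "c" fill the first block, "b" lands in the second, and "d" expires while waiting, which is the same thing that happened to the transactions that vanished.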

The cost of transactions on WAX is not a fee. Instead, you have a 24h CPU quota based on the amount of WAXP you have staked, plus a nice bonus shared across all accounts from the WAXP that isn't staked at all. So you can use at least the resources you staked, but likely way more. In the cloud wallet, you also have the option to pay per transaction as you go. That is great for user experience, but behind the curtains they use their own account with enough stake to cover your resource requirement. This gives the perception of a fee-based system, while in reality it just removes the need for you to lock up funds in staked WAXP. You also have this option if you use a self-managed wallet like Anchor, where their "fuel" system is based on the same idea.

At times when the chain isn't used as much, you can often transact way more than your staked resources allow. This is thanks to all the extra resources you can tap into from unstaked WAXP. For this reason you might see 200% CPU used on your account, but you might still be able to push a transaction. When usage goes up, that extra quota goes down. Nothing has changed on your own quota, except that you have access to fewer "extra" resources.
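A toy model can show why an account at 200% of its staked quota can still transact on a quiet chain. This is deliberately simplified and is not the chain's real resource formula: the function names, the linear shrinking of the bonus and all the numbers are assumptions made purely for illustration.

```python
def available_cpu_us(staked_quota_us, max_bonus_us, chain_utilization):
    """Toy model: guaranteed quota plus a bonus that shrinks under load."""
    if not 0.0 <= chain_utilization <= 1.0:
        raise ValueError("chain_utilization must be between 0 and 1")
    # Assumption: the shared bonus falls off linearly as the chain gets busy.
    bonus_us = max_bonus_us * (1.0 - chain_utilization)
    return staked_quota_us + bonus_us


def can_push(used_us, tx_cost_us, staked_quota_us, max_bonus_us, chain_utilization):
    """True if the account still has room for one more transaction."""
    limit_us = available_cpu_us(staked_quota_us, max_bonus_us, chain_utilization)
    return used_us + tx_cost_us <= limit_us


# An account that has already used 200% of its staked quota (2000 of 1000 us):
quiet_chain = can_push(2000, 300, 1000, 4000, chain_utilization=0.5)
busy_chain = can_push(2000, 300, 1000, 4000, chain_utilization=0.9)
```

With these made-up numbers the same transaction is accepted on the quiet chain and refused on the busy one, even though the staked quota never changed.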

If there are aspects above you don't fully grasp, it's ok.. the concept is actually not that complicated.

- Block producer nodes add transactions to blocks.

- When there are a lot of transactions, they leave them in the queue and retry on the next block until that one is full, then continue to the next.

- Your transaction keeps being reapplied until it is either put into a block or expires.

- When your transaction is accepted, your CPU time is charged.

If a block producer node did all this computing on every transaction that gets pushed, it would have to do a lot of work on transactions that will end up in the trash. The reasons could be that the account lacks resources, that the transaction is malformed, or other issues.

For this reason there are nodes in front of the block producer. Those nodes have different tasks.

One of those tasks can be to validate all transactions going through that node. When validating, it can check whether an account has enough resources to cover the transaction. If the account doesn't, the transaction gets thrown in the trash and will not be pushed to other nodes.

Another task a node could do is to check for errors or other issues in a transaction. Perhaps a user is trying to transfer tokens to an account that doesn't exist, which wouldn't work.

If the Block Producing node had to work with all failed transactions pushed through all nodes in the network, it would quickly be overwhelmed by just accepting transactions, and not have enough time to create the blocks. For that reason, WAX node jobs are split across multiple nodes, each specialized in its task. Good node operators ensure that each node is configured to be highly effective at one task, and nothing else.
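The filtering done by the nodes in front of the producer can be sketched roughly like this. It is a simplified illustration, not real node configuration: the `Transfer` class, the two functions and the account table are hypothetical names invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Transfer:
    sender: str
    receiver: str
    est_cpu_us: int  # estimated CPU cost of the transaction


def reject_reason(tx, cpu_left_us):
    """Return why a front node would drop tx, or None to forward it."""
    if tx.sender not in cpu_left_us:
        return "unknown sender"
    if tx.receiver not in cpu_left_us:
        return "receiver account does not exist"
    if cpu_left_us[tx.sender] < tx.est_cpu_us:
        return "not enough CPU"
    return None


def filter_for_producer(txs, cpu_left_us):
    """Split incoming transactions into forwarded and dropped."""
    forwarded, dropped = [], []
    for tx in txs:
        reason = reject_reason(tx, cpu_left_us)
        if reason is None:
            forwarded.append(tx)
        else:
            dropped.append((tx, reason))
    return forwarded, dropped


# Hypothetical account table: remaining CPU per account, in microseconds.
cpu_left_us = {"alice": 500, "bob": 100}
txs = [
    Transfer("alice", "bob", 300),     # fine, gets forwarded
    Transfer("bob", "alice", 300),     # bob lacks resources
    Transfer("alice", "nobody", 100),  # receiver does not exist
]
forwarded, dropped = filter_for_producer(txs, cpu_left_us)
```

Only the first transfer reaches the producer here; the other two are dropped early, so the producer never spends block time on them.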

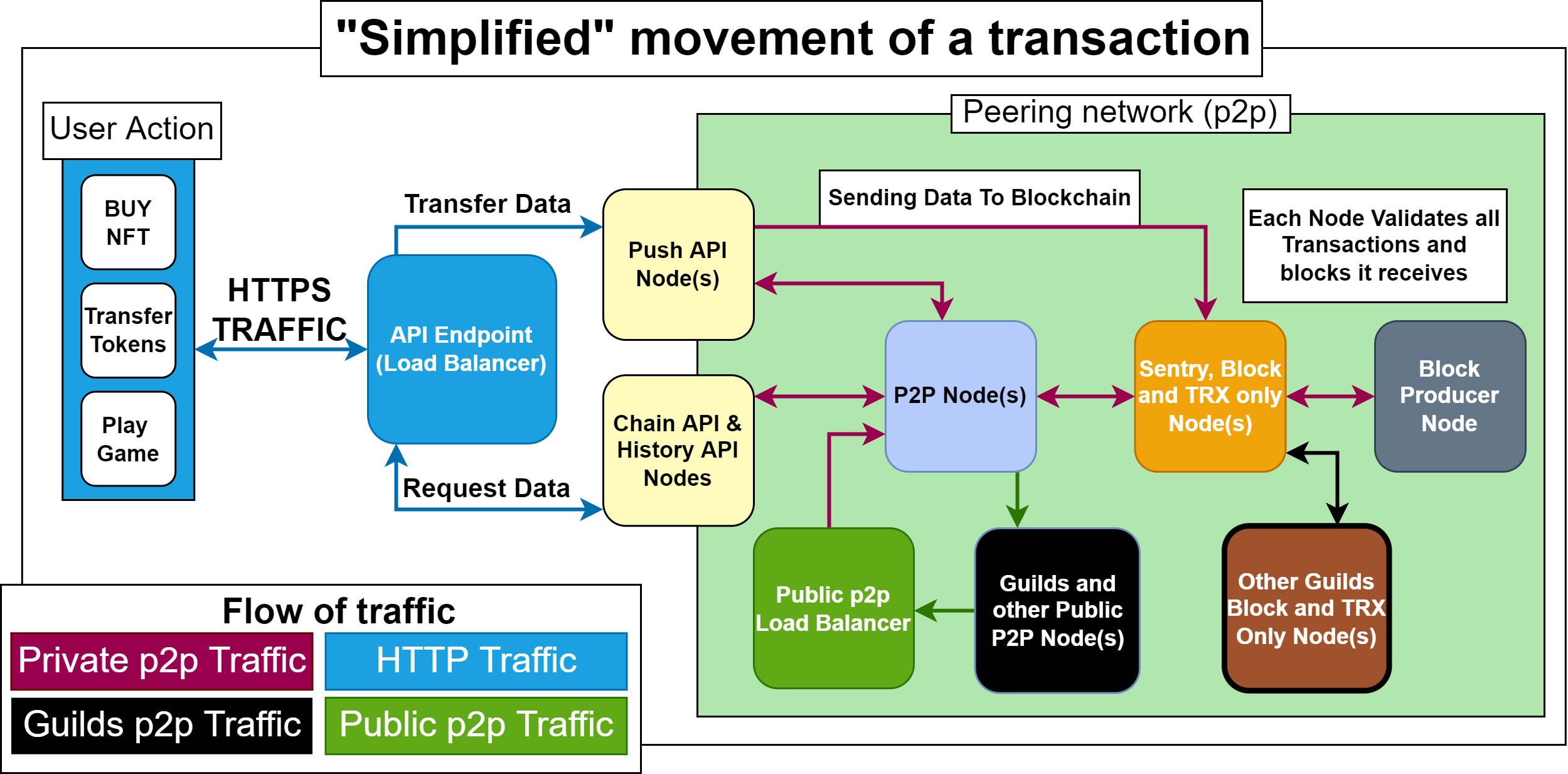

Each setup usually has a few different layers. The public layer that most users interact with is the API level, where your wallet, game, application and such can communicate with the blockchain. An API node is in reality often multiple different nodes behind a load balancer, and depending on what you want to do, your request gets forwarded to the node optimized for it. You have nodes that are fast at reading data, and others that are made to push transactions towards the block producer through a sentry node. See example below.

Then there are also nodes that are used to communicate with other nodes; we call them peering nodes, or p2p nodes. They ensure we have a network of nodes communicating with each other, so that they can send and receive information from others. For example, if you want to sync up a new node with all blocks, or start one today that reads all new events on the blockchain, it has to receive that information from somewhere. That's the peering network.

So, when you try to buy your NFT on a marketplace, and you sign that transaction, you push that through the line of nodes, starting with the push-API that verifies that the action is correct, and then it travels down the line through different nodes towards the producer. Now, let's exemplify this with a real situation..

We have a new primary sale (drop) of a very sought-after NFT release. There are a lot of people wanting to buy this NFT, so thousands of people push transactions to different nodes at the same time. At the first stage, the push-API checks each of them to see whether the account has enough resources to pay for it.

1) The push-API makes an assumption based on the current estimated CPU time required and the available resources on your account.

2) It then passes the transaction along to the next node, which can be configured to just forward it to the next, or to validate the transaction again. In the time since it was at the push-API, the account's resources may have changed if one of its other transactions reached a block.

3) This might continue for a few steps until it finally reaches the Block Producer node and waits in the queue to be processed. There, one final time, the CPU required for the transaction is verified while the physical CPU on the Block Producer node is executing it.

The CPU usage of a transaction is the actual physical time it takes for the Block Producer node to apply that transaction. The more data (NET), the longer it takes. Now we know.

Remember what I said at the start: this isn't sharing all the details, just the concept. The details on what actually took place will be shared at a later date. So this is mainly shared as a way to communicate the situation with the community.

Now, someone might push the same transaction through many different nodes at once. Each of them might even have unique transaction IDs, but the account can only afford to buy 1 NFT, so after the first one goes through, all the following ones will fail.

If you do this at scale, to many nodes, the copies might get through all stages and need to be processed by the Block Producer node, where all except the first one fail. For this user, it might increase the chance of getting their transaction processed quickly, and thereby of receiving the NFT they are after.
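Here is a small sketch of why those duplicates are costly. It is only a conceptual model and does not describe what actually took place: the function names are hypothetical, and it assumes that every API node sees the same not-yet-updated balance and that a failing attempt costs the producer as much CPU as a successful one.

```python
def api_node_accepts(balance_at_node, price):
    """Each API node checks against the last chain state it has seen."""
    return balance_at_node >= price


def producer_apply(attempts, balance, price, cpu_ms_per_attempt):
    """Apply attempts in order; return (succeeded, failed, cpu_spent_ms)."""
    succeeded = failed = cpu_spent_ms = 0
    for _ in range(attempts):
        # Assumption: the producer does the work even when the attempt fails.
        cpu_spent_ms += cpu_ms_per_attempt
        if balance >= price:
            balance -= price
            succeeded += 1
        else:
            failed += 1
    return succeeded, failed, cpu_spent_ms


# One account that can afford exactly one NFT pushes the same purchase
# through 20 different API nodes before any copy reaches a block.
balance, price = 100, 100
accepted = sum(api_node_accepts(balance, price) for _ in range(20))
result = producer_apply(accepted, balance, price, cpu_ms_per_attempt=2)
```

All 20 copies pass the early checks because none of them has been charged yet, the producer completes one purchase and rejects 19, and in this model it still spends 40ms of block time on the lot.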

But imagine that this is done at scale, and with much bigger actions than just buying an NFT. A transaction might contain an action that triggers further actions, which take a while to process. E.g. you send an NFT pack with 50 NFTs and open it: you have two actions in the transaction, one is a transfer of the pack, and the second is an action to unbox that pack. This unboxing triggers the creation or transfer of 50 NFTs into your account, which takes longer for the Block Producer to process than a simple transfer of tokens. That might start to add up in the transaction queue... mix that in with a lot of normal transactions and other features, and those 200ms blocks get filled by a few very big transactions with long execution times, so not enough normal transactions get put into blocks. That allows them to build up more and more, and start to expire.

When that happens, your transaction took so long to get from queue to block that it simply got thrown in the bin. The result is that you got a valid transaction as the response from the API node when you pushed that buy button, but the transaction never actually reached a block. And that is why your transaction isn't showing in your history, and why that NFT isn't in your inventory.
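The arithmetic behind an expiring transaction can be estimated with a few lines of Python. This is a back-of-the-envelope sketch under assumptions: blocks are packed perfectly, the queue is strictly in order, nothing new jumps ahead, the block interval is half a second (12 blocks per 6-second round), and the 30-second expiration is just an example value.

```python
import math

BLOCK_CPU_BUDGET_MS = 200  # per-block CPU budget quoted in the text
BLOCK_INTERVAL_S = 0.5     # assumption: 12 blocks per 6-second round


def seconds_until_included(cpu_queued_ahead_ms, own_cpu_ms):
    """Estimate the wait for a transaction queued behind other work."""
    total_ms = cpu_queued_ahead_ms + own_cpu_ms
    blocks_needed = math.ceil(total_ms / BLOCK_CPU_BUDGET_MS)
    return blocks_needed * BLOCK_INTERVAL_S


def will_expire(cpu_queued_ahead_ms, own_cpu_ms, expiration_s):
    """True if the estimated wait is longer than the expiration window."""
    return seconds_until_included(cpu_queued_ahead_ms, own_cpu_ms) > expiration_s


# A 2ms transfer behind 1 second of queued CPU work versus 20 seconds of it.
short_wait = seconds_until_included(1_000, 2)
long_wait = seconds_until_included(20_000, 2)
lost = will_expire(20_000, 2, expiration_s=30)
```

Under these assumptions the small transfer waits 3 seconds behind the short queue but about 50 seconds behind the long one, so with a 30-second expiration it never reaches a block.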

Transactions are applied in order, and simply spamming transactions to nodes would not really create the situation we saw now. That is, if the nodes are properly configured. In front of the API node you find protection against spam, and then each layer of nodes validates transactions and gets rid of a lot of further spam, until the Block Producer gets the cleanest list of transactions. A team with a less optimized setup might have a harder time handling spam of different types than others. And some sophisticated spamming has been seen before. One example was seen on EOSIO (on the EOS Network) and has since been patched. The TLDR of the patched issue is creating a loop using deferred transactions that exhausts the CPU of the Block Producers, making them incapable of putting any transactions into any blocks at all.

The TLDR will be below.

Hopefully this complicated and rather technical breakdown of the current situation can help alleviate your worries about what is happening. The main reason for the delays has been patched. The entity performing these actions is showing a deep knowledge of how the node operator setup works, how the blockchain functions work, and what to do to take advantage of that.

Basically, you can see it as the Block Producer nodes being 'DDOS' attacked from multiple angles. Combined with a high rate of normal WAX usage and their well-timed transactions, they managed to overload the Block Producer nodes by building up a huge queue of transactions, with the producers lacking the time to process all of them, resulting in valid transactions expiring. I use DDOS lightly in this example; "overloaded" is probably a better term, as we can't be sure of the intention behind the actions, just the profit incentive that caused it, and that it was clearly affecting users' transactions.

Now, this is being patched, and this actually will lead to better setups across all guilds, as some setups managed the situation better than others.

So, a nice test, it didn't stop the chain, but it negatively affected users, and it will result in a stronger ecosystem. We have spent most of the last few days diving into the different sides of this situation and as a guild collective learnt a lot.

If you are a nerd and want a fuller breakdown of the layout of the nodes, you will find that below.

